27. Quiz: State-Value Functions

In this quiz, you will calculate the value function corresponding to a particular policy.

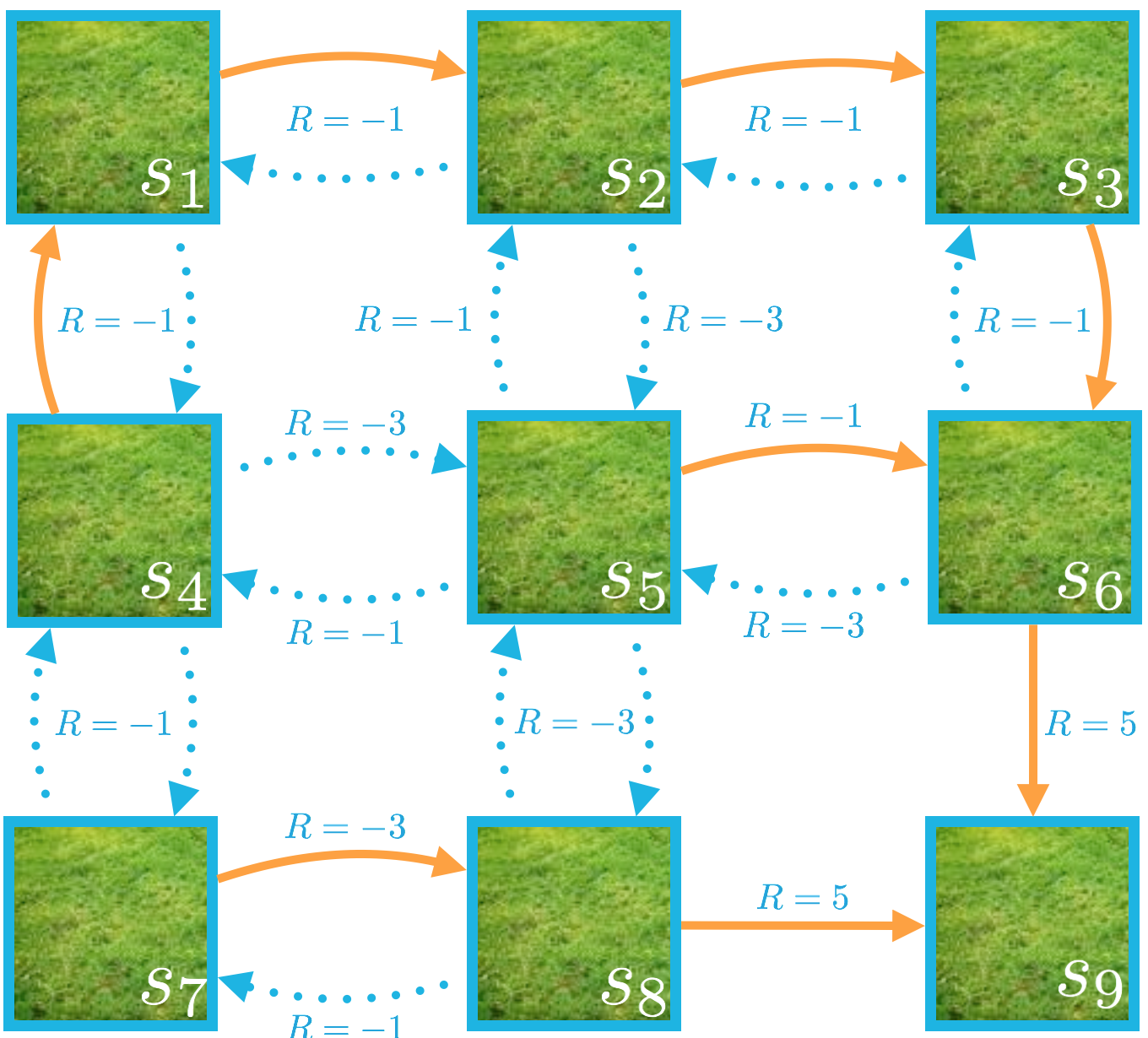

Each of the nine states in the MDP is labeled as one of \mathcal{S}^+ = \{s_1, s_2, \ldots, s_9\}, where s_9 is a terminal state.

Consider the (deterministic) policy that is indicated in orange in the figure.

The policy \pi is given by:

\pi(s_1) = \text{right}

\pi(s_2) = \text{right}

\pi(s_3) = \text{down}

\pi(s_4) = \text{up}

\pi(s_5) = \text{right}

\pi(s_6) = \text{down}

\pi(s_7) = \text{right}

\pi(s_8) = \text{right}
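To see where this policy leads, the path from any starting state can be traced in a few lines of Python. This is only a sketch: it assumes the nine states are numbered row by row on a 3x3 grid (the layout comes from the figure and is not spelled out in the text), and the names `MOVES`, `POLICY`, `next_state`, and `trajectory` are illustrative.

```python
# Grid offsets for each action, as (row change, column change).
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

# The policy listed above, keyed by state index (s_9 is terminal, so it has no entry).
POLICY = {
    1: "right", 2: "right", 3: "down",
    4: "up",    5: "right", 6: "down",
    7: "right", 8: "right",
}


def next_state(s, action, width=3):
    """Return the state reached from s, ASSUMING a row-major 3x3 grid layout."""
    row, col = divmod(s - 1, width)
    d_row, d_col = MOVES[action]
    return (row + d_row) * width + (col + d_col) + 1


def trajectory(s, terminal=9):
    """Follow the policy from s until the terminal state is reached."""
    path = [s]
    while s != terminal:
        s = next_state(s, POLICY[s])
        path.append(s)
    return path


print(trajectory(4))  # [4, 1, 2, 3, 6, 9]
print(trajectory(7))  # [7, 8, 9]
```

Under that layout assumption, every state reaches s_9 without revisiting a state, so each episode is finite and each value depends only on the states further along the same path.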

Recall that since s_9 is a terminal state, the episode ends immediately if the agent begins in this state. The agent therefore never has to choose an action there, so s_9 is not included in the domain of the policy, and v_\pi(s_9) = 0.

Take the time now to calculate the state-value function v_\pi that corresponds to the policy. (You may find that the Bellman expectation equation saves you a lot of work!)

Assume \gamma = 1.
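As a reminder of why the Bellman expectation equation helps here: for a deterministic policy, and assuming each action leads to a single next state with a single reward, the expectation collapses to a one-step relation. The symbols r(s, \pi(s)) for the reward and s' for the successor state are notation introduced for this sketch.

```latex
v_\pi(s) = \mathbb{E}_\pi\left[ R_{t+1} + \gamma\, v_\pi(S_{t+1}) \mid S_t = s \right]
         = r(s, \pi(s)) + \gamma\, v_\pi(s'),
\qquad v_\pi(s_9) = 0
```

With \gamma = 1, the value of a state is the reward for one step plus the value of the next state, so the values can be filled in backward from s_9.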

Once you have finished, use v_\pi to answer the questions below.

Question 1

What is v_\pi(s_4)?

SOLUTION:

1

Question 2

What is v_\pi(s_1)?

SOLUTION:

2

Question 3

Consider the following statements, and select those that are true:

- (1) v_\pi(s_6) = -1 + v_\pi(s_5)

- (2) v_\pi(s_7) = -3 + v_\pi(s_8)

- (3) v_\pi(s_1) = -1 + v_\pi(s_2)

- (4) v_\pi(s_4) = -3 + v_\pi(s_7)

- (5) v_\pi(s_8) = -3 + v_\pi(s_5)

SOLUTION:

- (2)

- (3)
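The solutions above can be checked with a short Python sketch that applies the one-step Bellman relation along the policy. The rewards are shown only in the figure, so the `REWARD` table below is an assumption: -1 for s_1 and -3 for s_7 follow from statements (3) and (2), while +5 for entering s_9 and -1 elsewhere are chosen to be consistent with the answers to Questions 1 and 2. The successor table assumes a row-major 3x3 grid, and all names are illustrative.

```python
# Successor of each state under the policy, ASSUMING a row-major 3x3 grid.
NEXT_STATE = {1: 2, 2: 3, 3: 6, 4: 1, 5: 6, 6: 9, 7: 8, 8: 9}

# ASSUMED reward for the policy's action in each state (the real values are in
# the figure): -1 per step, -3 for the step s_7 -> s_8, +5 for entering s_9.
REWARD = {1: -1, 2: -1, 3: -1, 4: -1, 5: -1, 6: 5, 7: -3, 8: 5}

GAMMA = 1.0
TERMINAL = 9


def state_values(next_state, reward, gamma=GAMMA, terminal=TERMINAL):
    """Compute v_pi via v(s) = r(s) + gamma * v(s'), starting from v(terminal) = 0."""
    v = {terminal: 0.0}

    def value(s):
        # Recursion ends because every path under the policy reaches the terminal state.
        if s not in v:
            v[s] = reward[s] + gamma * value(next_state[s])
        return v[s]

    for s in next_state:
        value(s)
    return v


v_pi = state_values(NEXT_STATE, REWARD)

print(v_pi[4])  # 1.0  (Question 1)
print(v_pi[1])  # 2.0  (Question 2)

# Question 3: evaluate each statement against the computed values.
statements = {
    1: v_pi[6] == -1 + v_pi[5],
    2: v_pi[7] == -3 + v_pi[8],
    3: v_pi[1] == -1 + v_pi[2],
    4: v_pi[4] == -3 + v_pi[7],
    5: v_pi[8] == -3 + v_pi[5],
}
print([k for k, ok in statements.items() if ok])  # [2, 3]
```

Statements (1), (4), and (5) fail because they pair a state with a neighbor that the policy does not move to from that state; the Bellman relation only links a state to its successor under \pi.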